Implementing Data Contracts: 7 Key Learnings

What are data contracts and do they make sense for your organization?

Foreword: A Data Engineering Movement Afoot?

We ran into Andrew, a team lead and senior engineer at GoCardless, at our London IMPACT event.

He talked about implementing data contracts and how GoCardless has eschewed what is solidifying as the industry-standard approach to the modern data warehouse: ELT. This approach typically involves complex pipelines, large data dumps, and numerous in-warehouse transformations.

GoCardless’s ETL approach focuses on treating data like an API. They codify consumers’ data needs and schemas in a data contract up front, and then deliver the data pre-modeled into the data warehouse.

It’s becoming clear a data engineering movement is afoot as we have recently covered other organizations taking a similar approach: Convoy and Vimeo.

You can read more about Convoy’s approach from my post with their Head of Product, Data Platform, Chad Sanderson, “The modern data warehouse is broken.”

We also have an article covering Vimeo’s approach in a case study sourced from former VP Lior Solomon’s interview with the Data Engineering Show podcast.

Is this a “back to the future” data engineering best practice, as Joe Reis would call it?

Is it an alternative approach fit for a certain breed of organization? (Interestingly, these three organizations share a need for near real-time data and have services that produce copious first-party event and transactional data.) Or is it a passing fad?

Time will be the judge. But the emergence of this alternative approach reveals a few truths that data leaders should note:

- Data pipelines are constantly breaking and creating data quality AND usability issues.

- There is a communication chasm between service implementers, data engineers, and data consumers.

- ELT is a double-edged sword that needs to be wielded prudently and deliberately.

- There are multiple approaches to solving these issues and data engineers are still very much pioneers exploring the frontier of future best practices.

Data contracts could become a key piece of the data freshness and quality puzzle — as explained by my colleague Shane Murray in the video below — and much can be learned from Andrew’s experience implementing them at GoCardless, detailed here.

7 Key Learnings From Our Experience Implementing Data Contracts

A perspective from Andrew Jones, GoCardless

One of the core values at GoCardless is to ask why.

After meeting regularly with data science and business intelligence teams and hearing about their data challenges, I began to ask “why are we having data issues arise from service changes made upstream?”

This post will answer that question and explain how asking it led to the implementation of data contracts at our organization. I will cover some of the technical components I have discussed in a previous article series, with a deeper dive into the key learnings.

Data quality challenges upstream

What I found in my swim upstream were well-meaning engineers modifying services, unaware that something as simple as dropping a field could have major implications for dashboards (or other consumers) downstream.
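To make that failure mode concrete, here is a minimal Python sketch of a check that compares two versions of a schema and flags the changes a downstream consumer would likely not survive. The schema shape (a plain mapping of field name to type name), the example fields, and the function name `find_breaking_changes` are assumptions made for illustration; this is not GoCardless's tooling.

```python
from typing import Dict, List


def find_breaking_changes(old: Dict[str, str], new: Dict[str, str]) -> List[str]:
    """Return descriptions of changes that could break downstream consumers.

    Schemas are hypothetical: a mapping of field name -> type name.
    Added fields are treated as safe; dropped or retyped fields are not.
    """
    problems = []
    for field, old_type in sorted(old.items()):
        if field not in new:
            problems.append(f"field dropped: {field}")
        elif new[field] != old_type:
            problems.append(f"type changed: {field} ({old_type} -> {new[field]})")
    return problems


# Hypothetical before/after schemas for a table owned by a service.
before = {"id": "string", "amount": "integer", "currency": "string"}
after = {"id": "string", "amount": "float", "created_at": "timestamp"}

for problem in find_breaking_changes(before, after):
    print(problem)
```

A check like this only helps if it runs before the change ships, for example in the service's own CI, which is the kind of proactive signal the rest of this post is about.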

Part of the challenge was that data was an afterthought, but part of it was also that our most critical data came directly from our services’ databases via change data capture (CDC).

The problem with investing in code and tooling to transform the data after it’s loaded is that when schemas change those efforts either lose value or need to be re-engineered.

So there were two problems to solve. On the process side, data needed to become a first-class citizen during upstream service updates, including proactive downstream communication. On the technology side, we needed a self-service mechanism to provision capacity and empower teams to define how the data should be ingested.

Fortunately, software engineers solved this problem long ago with the concept of APIs. These are essentially contracts with documentation and version control that allow the consumer to rely on the service without fear it will change without warning and negatively impact their efforts.

At GoCardless we believed data contracts could serve this purpose, but as with any major initiative, it was important to first gather requirements from the engineering teams (and maybe sell a bit too).

Talking to the team

We started talking to every engineering team at the company. We would explain what data contracts were, extol their benefits, and solicit feedback on the design.

We got some great, actionable feedback.

For example, most teams didn’t want to use Avro, so we decided to use JSON as the interchange format for the contracts because it’s extensible. The privacy and security team helped us build in privacy by design, particularly around data handling and categorizing which entity owned which data asset.

We also found these teams had a wide range of use cases and wanted to make sure the tooling was flexible enough. In fact, what they really wanted was autonomy.

In our previous setup, once data came into the data warehouse from the CDC, a data engineer would own that data and everything that entailed to support the downstream services. When those teams wanted to give a service access to BigQuery or change their data, they would need to go through us.

Not only was that helpful feedback, but it also became a key selling point for the data contract model. Once the contract was developed, they could select from a range of tooling, not have to conform to our opinions about how the data was structured, and really control their own destiny.

Let’s talk about how it works.

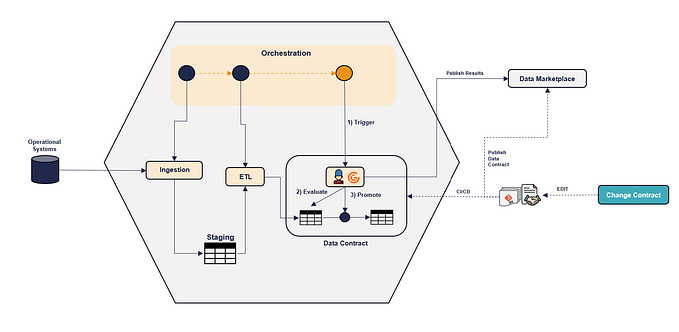

Our data contract architecture and process

The data contract process is completely self-serve.

It starts with the data team using Jsonnet to define their schemas, categorize the data, and choose their service needs. Once the JSON file is merged in GitHub, dedicated BigQuery and Pub/Sub resources are automatically deployed and populated with the requested data via a Kubernetes cluster.

Here is one of our data contracts, abridged to show only two fields.

The process is designed to be completely automated and decentralized. This minimizes the dependencies across teams so that if one team needs to increase the workers for its service, it doesn’t impact another team’s performance.
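As a rough illustration of the flow described above, the following Python sketch models a contract as plain data, validates it, and derives the names of the resources an automated deployment might create. Everything specific here is hypothetical: the contract and field names, the `pii`/`non_pii` categories, the `owner` attribute, and the naming scheme are assumptions for illustration, not the GoCardless contract format (which is written in Jsonnet).

```python
from dataclasses import dataclass
from typing import Dict, List

ALLOWED_CATEGORIES = {"pii", "non_pii"}  # hypothetical data categories


@dataclass(frozen=True)
class Field:
    name: str
    type: str
    category: str  # how the data should be handled, e.g. for privacy


@dataclass(frozen=True)
class DataContract:
    name: str
    version: int
    owner: str  # team accountable for the data
    fields: List[Field]

    def validate(self) -> List[str]:
        """Return a list of problems; an empty list means the contract is valid."""
        errors = []
        if not self.fields:
            errors.append("contract must define at least one field")
        seen = set()
        for f in self.fields:
            if f.name in seen:
                errors.append(f"duplicate field: {f.name}")
            seen.add(f.name)
            if f.category not in ALLOWED_CATEGORIES:
                errors.append(f"unknown category for {f.name}: {f.category}")
        return errors

    def resource_names(self) -> Dict[str, str]:
        """Derive per-contract resource names (hypothetical naming scheme).

        Including the version means a schema change yields a new set of names.
        """
        base = f"{self.name}_v{self.version}"
        return {"bigquery_table": base, "pubsub_topic": base.replace("_", "-")}


contract = DataContract(
    name="bank_account_information",
    version=1,
    owner="payments-team",
    fields=[
        Field("bank_account_id", "string", "non_pii"),
        Field("account_holder_name", "string", "pii"),
    ],
)

assert contract.validate() == []
print(contract.resource_names())
```

Keeping ownership and categorization in the same object as the schema means a deployment step can refuse to provision anything for a contract that fails validation.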

The Internal Risk division was one of the first teams to utilize the new system. They use data across different features to identify and mitigate risk from potentially fraudulent behavior.

Previously they were leveraging data from the production database just because it was there. Now they have a much more scalable environment in BigQuery that they can manage directly.

As of this writing, we are 6 months into our initial implementation and excited by the momentum and progress. Roughly 30 different data contracts have been deployed which are now powering about 60% of asynchronous inter-service communication events.

We have made steady inroads working with traditional data consumers such as analytics and data science teams and plan to help migrate them over to this system as we decommission our CDC pipeline at some point in the next year.

Most importantly, our data quality has started to improve, and we are seeing some great examples of consumers and generators working together to set the data requirements — something that rarely happened before.

Key learnings

So what have we learned?

- Data contracts aren’t modified too much once set: One of the fears with this type of approach is that there will be a lot of upfront work to define schemas that will just change anyway. The beauty of ELT is that data can be quickly rearranged and transformed to fit those new needs, whereas our approach requires more change management. However, what we have found with our current user base is that their needs don’t change frequently. This is an important finding because currently even one schema change requires a new contract and a new set of infrastructure, which forces a choice between going greenfield and migrating the old environment over.

- Make self-service easy: Old habits die hard and it’s human nature to be resistant to change. Even if you are promising better data quality, when it comes to data, especially critical data, people are often (understandably) risk averse. This is one of the reasons we made our self-service process as easy and automated as possible. It removes the bottleneck and provides another incentive: autonomy.

- Introduce data contracts during times of change: This could be counterintuitive, as you might suppose the organization can only digest so much change at a time. But our best adoption has come when teams want new data streams, rather than when we try to migrate services that are already in production. Data contracts are also a great system for decentralized data teams, so if your team is pursuing any type of data mesh-style initiative, it’s an ideal time to ensure data contracts are a part of it.

- Roadshows help: Being customer obsessed is so important it’s almost a cliche. This is true with internal consumers as well. Getting feedback from other data engineering teams and understanding their use cases was essential to success. Also, buy-in took place the minute they started brainstorming with us.

- Bake in categorization and governance from the start, but get started: Making sure we had the right data ownership and categorization from the start avoided a lot of potentially messy complications down the road. While putting together the initial data contract template with the different categories and types may seem daunting at first, it turned out to be a relatively straightforward task once we got started after our roadshow.

- Inter-service use cases make great early adopters and are helpful for iteration: Teams with services that need to be powered by other services have been our greatest adopters. They have the greatest need for the data to be reliable, timely, and high quality. While progress with analytics and data science consumers has been slower, we’ve built momentum and iterated on the process by focusing on our early adopters.

- Have the right infrastructure in place: Simply put, this was the right time for data contracts at GoCardless. We had concluded an initiative to get all the necessary infrastructure in place and our data centralized in BigQuery. Leveraging an outbox pattern and Pub/Sub has also been important to make sure our service-to-service communications are backed up and decoupled from production databases.
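For readers unfamiliar with the outbox pattern mentioned in the last point, here is a minimal, generic sketch in Python using an in-memory SQLite database. It is not GoCardless's implementation: the table names, the `payment.created` event type, and the `publish` callback (a stand-in for a message-bus client) are all assumptions for illustration. The idea is that the service writes its business row and the outgoing event in one transaction, and a separate relay later publishes the unsent events.

```python
import json
import sqlite3
from typing import Callable, List

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE payments (id TEXT PRIMARY KEY, amount INTEGER)")
conn.execute(
    "CREATE TABLE outbox ("
    "id INTEGER PRIMARY KEY AUTOINCREMENT, payload TEXT, sent INTEGER DEFAULT 0)"
)


def create_payment(payment_id: str, amount: int) -> None:
    """Write the business row and its event atomically."""
    event = {"type": "payment.created", "id": payment_id, "amount": amount}
    with conn:  # commits on success, rolls back both inserts on error
        conn.execute("INSERT INTO payments VALUES (?, ?)", (payment_id, amount))
        conn.execute("INSERT INTO outbox (payload) VALUES (?)", (json.dumps(event),))


def relay_outbox(publish: Callable[[dict], None]) -> int:
    """Publish unsent events in order and mark them sent; return the count."""
    rows = conn.execute(
        "SELECT id, payload FROM outbox WHERE sent = 0 ORDER BY id"
    ).fetchall()
    for row_id, payload in rows:
        publish(json.loads(payload))  # stand-in for a message-bus publish call
        with conn:
            conn.execute("UPDATE outbox SET sent = 1 WHERE id = ?", (row_id,))
    return len(rows)


published: List[dict] = []
create_payment("PM123", 1500)
print(relay_outbox(published.append))  # first run publishes the pending event
print(relay_outbox(published.append))  # second run finds nothing left to send
```

Because the relay marks an event as sent only after publishing it, a crash between those two steps would cause the event to be published again on the next run. A sketch like this therefore delivers at least once, and consumers should tolerate duplicates.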

Tradeoffs

No data engineering approach is free of tradeoffs. In pursuing this data contract strategy we made a few deliberate decisions, including:

- Speed vs sprawl: We are keeping a close eye on how this system is being used, but by prioritizing speed and empowering decentralized teams to provision their own infrastructure there is the possibility of sprawl. We will mitigate this risk as needed, but we view it as the lesser evil compared to the previous state where teams were forced to use frequently evolving production data.

- Commitment vs. change: Self-service is easy, but the data contract process and the contract itself are designed for the data consumer to put careful consideration into their current and future needs. The process for changing a contract is intentionally heavy to reflect the investment and commitment being made by both parties in maintaining this contract.

- Pushing ownership upstream and across domains: We are eliminating ourselves as a bottleneck and middleman between data generators and data consumers. This approach doesn’t change the core set of events that impact data quality. Implementation services still need to be modified, and those modifications still have ramifications downstream. But what has changed is that these contracts have moved the onus onto service owners to communicate and navigate these challenges, and they now know which teams to coordinate with regarding which data assets.

The future of data contracts at GoCardless

Data contracts are a work in progress at GoCardless. This is a technical and cultural change that will require commitment from multiple stakeholders.

I am proud of how the team and organization have responded to the challenge and believe we will ultimately fully migrate to this system by the middle of next year and, as a result, will see upstream data incidents plummet.

While we continue to invest in these tools, we are also starting to look more at what we can do to help our consumers discover and make use of the data. Initially this will be through a data catalog, and in future we will consider building on that further to add things like data lineage, SLOs and other data quality measures.
