Who owns data quality? And when?

A practical framework for how to pass the data quality baton across teams.

The most common question I hear from enterprise data teams is, “who owns data quality?”

The next most common? “Why?” and “How?”

At any enterprise, data quality isn’t just a marathon — it’s a relay. And just like any relay race, every time the baton is passed, there’s a new opportunity to drop it. Defining data ownership and how it changes is critical to successfully operationalizing a data quality strategy — but with multiple domain layers and hundreds of interconnected data products, determining who owns what usually feels more like drawing straws than reasoned decision-making.

After countless conversations with Fortune 500 data leaders facing this same challenge, I’ve determined three critical steps you can take to move your data quality strategy from half-adopted to fully operational.

Step 1: Define your most valuable data products

It’s impractical to monitor every table for every issue all the time — nor should you. Aligning around your most critical data products — whether that’s an ML model or a suite of insights — will enable you to prioritize your data quality efforts for impact.

Step 2: Differentiate between foundational and derived data products

A foundational data product is typically owned by a central data platform team (or sometimes a source aligned data engineering team), and they’re designed to serve hundreds of use cases across teams and business domains.

Derived data products on the other hand are built on top of these foundational data products. Unlike foundational data products, derived data products are designed for a specific use case and are owned by that domain-aligned data team.

For example, a “Single View of Customer” is a common foundational data product that might feed derived data products such as a product up-sell model, churn forecasting, and an enterprise dashboard.

Step 3: Divide data quality management responsibilities by domain and product type

There are different processes for detecting, triaging, resolving, and measuring data quality incidents depending on whether a product is derived or foundational.

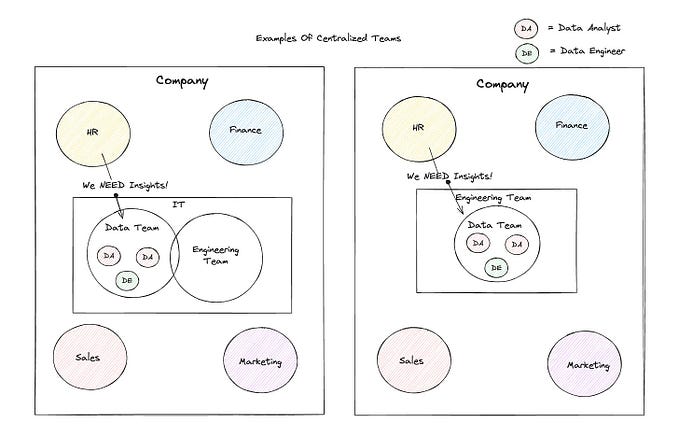

This image does an excellent job illustrating that distribution (as I would see it):

When it comes to foundational data products, it’s probably best to keep data quality ownership with a centralized data engineering or governance team. Of course, there are exceptions to any rule, but this is the approach that will make the most sense for the majority of data teams.

For your derived data products however, things get a little more complicated. In this case, ownership is often shared between a variety of functions based on where you’re at in the incident resolution process and the domain you’re supporting. Here’s how I would break this down:

- Detect: assign a domain-aligned data steward to own end-to-end monitoring and baseline data quality, and a domain-aligned analyst for data quality rules that can’t be automated or generated from central standards.

- Triage: create a dedicated triage team (often labeled as dataops) to support all products within a given domain. The data product owner will have accountability but not responsibility.

- Resolution: arm a domain-aligned data engineering team with data observability that will connect anomaly to root cause — at the data, system, and code levels of your environment.

- Measure: assign your domain-aligned data stewards to own performance measurement on the back-end based on explicit and use case-specific SLAs — for example a financial report may need to be highly accurate with some margin for timeliness whereas a machine learning model may be the exact opposite.

But remember, every data team is different. Consider the needs and abilities within your organization when defining your specific ownership strategy, and be open to considering an alternative split as it makes sense for your team Operationalizing data quality is never easy, but by bringing your domain leaders into the process with the right mix of tooling, talent, and some clear lines of responsibility, hitting your data product SLAs will be business as usual.

Want more insights? Check out this new article by Michael Segner to learn how our customers operationalize data quality across domains.

Stay reliable,

Barr